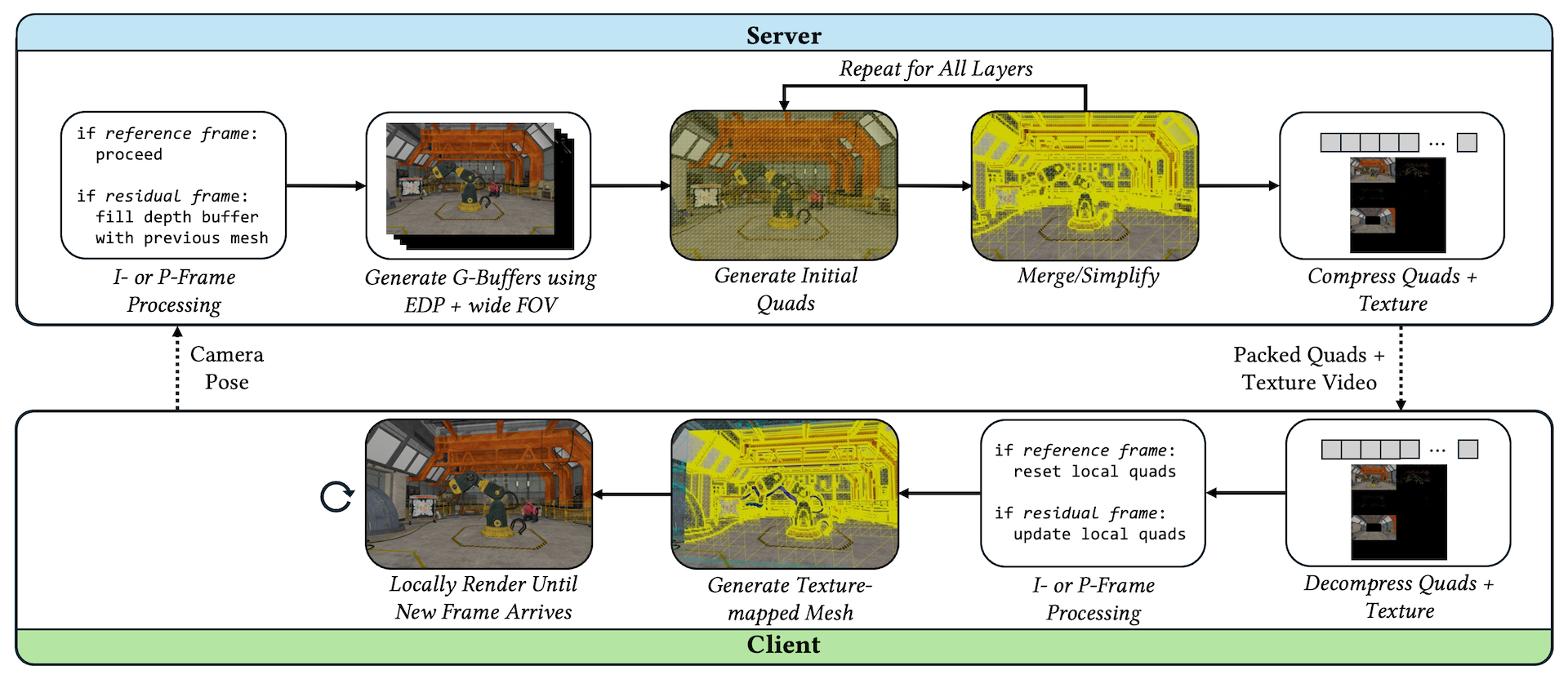

QUASAR is a remote rendering system that represents scene views using pixel-aligned quads, enabling temporally consistent and bandwidth-adaptive streaming for high-quality, real-time visualization on thin clients.

Our repository below provides baseline implementations of remote rendering systems designed to support and accelerate research in the field. For a detailed discussion of the design principles, implementation details, and benchmarks, please see our paper:

QUASAR: Quad-based Adaptive Streaming And Rendering

Edward Lu and Anthony Rowe

ACM Transactions on Graphics 44(4) (proc. SIGGRAPH 2025)

Paper: https://quasar-gfx.github.io/assets/quasar_siggraph_2025.pdf

GitHub (Main): https://github.com/quasar-gfx/QUASAR

GitHub (Client): https://github.com/quasar-gfx/QUASAR-client

To download the OpenXR client code, clone the repository at https://github.com/quasar-gfx/QUASAR-client using git:

git clone --recursive https://github.com/quasar-gfx/QUASAR-client.git

If you accidentally cloned the repository without --recursive, you can run:

git submodule update --init --recursive

Note: This code has been tested on Meta Quest 2/3/Pro.

The following steps assume you have the latest Android Studio installed on your computer:

Open QuestClientApps/build.gradle in the QUASAR-client repository in Android Studio, select an app in the Configurations menu at the top (the dropdown to the left of the Run button), and click the Run button to build and upload. The first time you open and build the project may take a while.

You can also open individual apps from the QuestClientApps/Apps/ folders. For example, QuestClientApps/Apps/QUASARViewer/build.gradle.

If Android Studio cannot find shaders after you pull updated code from GitHub, delete the .cxx directories with rm -rf QuestClientApps/Apps/**/.cxx, resync the project, and rerun.

All apps allow you to move through a scene using the controller joysticks. You will move in the direction you are looking.

The Scene Viewer app loads a JSON scene to view on the headset (you can download example models from this link).

Download and unzip into QuestClientApps/Apps/SceneViewer/assets/models/scenes/ (this will be gitignored).

Note: Keep only ONE glb in the assets/models/scenes/ directory at a time, since Android will run out of storage if you include all of them. For example, copy only RobotLab.glb into scenes/.

The ATW Client app allows the headset to act as a receiver for a stereo video stream from the server sent across a network. The headset will reproject each eye using a homography to warp the images along a plane.
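To make the plane-based reprojection concrete, here is a minimal sketch of warping one pixel with a plane-induced homography, H = K (R + t nᵀ / d) K⁻¹. It is not taken from the QUASAR-client code. The type names (Intrinsics, Vec3, Mat3, Pixel), the function warpPixel, the pinhole model with +Z forward, and the convention X_target = R · X_source + t with the plane n · X_source = d are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types for illustration; not from the QUASAR code base.
struct Intrinsics { float fx, fy, cx, cy; };  // pinhole camera parameters
struct Vec3 { float x, y, z; };
struct Mat3 { float m[3][3]; };               // row-major rotation matrix
struct Pixel { float u, v; };

// Warp a pixel from the rendered (source) view into the display (target) view
// using the plane-induced homography H = K (R + t n^T / d) K^{-1}.
// Assumed convention: X_target = R * X_source + t, and the scene is
// approximated by the plane n . X_source = d in the source camera frame.
Pixel warpPixel(const Intrinsics& k, const Mat3& R, const Vec3& t,
                const Vec3& n, float d, Pixel p) {
    assert(d != 0.0f);  // the plane must not pass through the source camera

    // K^{-1} p: back-project the pixel to a ray with z = 1.
    const float ray[3] = {(p.u - k.cx) / k.fx, (p.v - k.cy) / k.fy, 1.0f};

    // (n . ray) / d scales the translation term for points on the plane.
    const float s = (n.x * ray[0] + n.y * ray[1] + n.z * ray[2]) / d;
    const float tv[3] = {t.x, t.y, t.z};

    // q = (R + t n^T / d) * ray
    float q[3];
    for (int i = 0; i < 3; ++i) {
        q[i] = R.m[i][0] * ray[0] + R.m[i][1] * ray[1] + R.m[i][2] * ray[2]
             + tv[i] * s;
    }

    // K q followed by the perspective divide (assumes q[2] != 0).
    return {k.fx * q[0] / q[2] + k.cx, k.fy * q[1] / q[2] + k.cy};
}
```

With an identity rotation and zero translation the pixel maps to itself. A pure rotation (t = 0) reduces to the familiar K R K⁻¹ timewarp, which does not depend on the plane at all.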

First, open QuestClientApps/Apps/ATWClient/include/ATWClient.h and change:

std::string serverIP = "192.168.22.227";

to your server's IP address. Then, in the QUASAR repository, build and run:

# in build directory

cd apps/atw/streamer

./atw_streamer --size 3840x1080 --scene ../assets/scenes/robot_lab.json --video-url <headset's IP>:12345 --vr

To get your headset's IP address, run:

adb shell ip addr show wlan0 # look for address after 'inet'

in a terminal with your headset connected. Make sure you have adb installed.

The MeshWarp Viewer app will load a saved static frame from MeshWarp to view on the headset. You can change which scene is loaded by editing:

std::string sceneName = "robot_lab"; // choose from robot_lab, robot_lab_transparent, sun_temple, viking_village, or san_miguel

in QuestClientApps/Apps/MeshWarpViewer/include/MeshWarpViewer.h.

The MeshWarp Client app allows the headset to act as a receiver for a video and depth stream from the server sent across a network. The headset will reconstruct a mesh using the depth stream and texture map it with the video for reprojection.
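As a rough illustration of reconstructing a mesh from a depth image, the sketch below unprojects every depth pixel to a 3D vertex and connects neighboring pixels with two triangles per grid cell. It is not the MeshWarp Client implementation. The names Vertex, DepthMesh, and buildDepthMesh, the pinhole intrinsics (fx, fy, cx, cy), and the assumption that depth is linear distance along +Z are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical types for illustration; not from the QUASAR code base.
struct Vertex { float x, y, z; };
struct DepthMesh {
    std::vector<Vertex> vertices;        // one vertex per depth pixel
    std::vector<std::uint32_t> indices;  // triangle list, 3 indices per triangle
};

// Build a regular grid mesh from a row-major depth image.
// Assumes a pinhole camera and linear depth along +Z.
DepthMesh buildDepthMesh(const std::vector<float>& depth, int width, int height,
                         float fx, float fy, float cx, float cy) {
    assert(width > 1 && height > 1);
    assert(depth.size() == static_cast<std::size_t>(width) * height);

    DepthMesh mesh;
    mesh.vertices.reserve(depth.size());

    // Unproject each pixel (u, v) with depth z to a camera-space point.
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            const float z = depth[static_cast<std::size_t>(v) * width + u];
            mesh.vertices.push_back({(u - cx) / fx * z, (v - cy) / fy * z, z});
        }
    }

    // Connect each 2x2 block of neighboring pixels with two triangles.
    for (int v = 0; v + 1 < height; ++v) {
        for (int u = 0; u + 1 < width; ++u) {
            const std::uint32_t i00 = static_cast<std::uint32_t>(v * width + u);
            const std::uint32_t i10 = i00 + 1;                                    // right
            const std::uint32_t i01 = i00 + static_cast<std::uint32_t>(width);    // below
            const std::uint32_t i11 = i01 + 1;                                    // below right
            mesh.indices.insert(mesh.indices.end(), {i00, i01, i10, i10, i01, i11});
        }
    }
    return mesh;
}
```

Texture coordinates are omitted here. In this layout they would be the normalized pixel position of each vertex, so the video frame can be sampled directly.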

First, open QuestClientApps/Apps/MeshWarpClient/include/MeshWarpClient.h and change:

std::string serverIP = "192.168.22.227";

to your server's IP address. Then, in the QUASAR repository, build and run:

# in build directory

cd apps/meshwarp/streamer

./mw_streamer --size 1920x1080 --scene ../assets/scenes/robot_lab.json --video-url <headset's IP>:12345 --depth-url <server's IP>:65432 --depth-factor 8

The Quads Viewer app will load a saved static frame from QuadWarp to view on the headset. You can change which scene is loaded by editing:

std::string sceneName = "robot_lab"; // choose from robot_lab, robot_lab_transparent, sun_temple, viking_village, or san_miguel

in QuestClientApps/Apps/QuadsViewer/include/QuadsViewer.h.

The Quads Client app allows the headset to act as a receiver for a video and quad proxy metadata stream from the server sent across a network. The headset will reconstruct a mesh with the quads from the metadata stream and texture map it with the video for reprojection.
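One way to picture rebuilding geometry from quad proxies is to intersect the camera rays through a quad's four pixel corners with the quad's plane. The sketch below does exactly that. The QuadProxy layout, the function quadCorners, and the pinhole model with +Z forward are assumptions for illustration only; the actual metadata format streamed by the server is not described here and may differ.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Point3 { float x, y, z; };

// Hypothetical quad proxy layout for illustration; not the QUASAR wire format.
struct QuadProxy {
    float u0, v0, u1, v1;  // pixel-aligned rectangle covered by the quad
    float nx, ny, nz;      // plane normal in camera space
    float d;               // plane offset, so that n . X = d
};

// Recover the four camera-space corners of a quad by intersecting the rays
// through its pixel corners with the quad's plane.
std::array<Point3, 4> quadCorners(const QuadProxy& q,
                                  float fx, float fy, float cx, float cy) {
    const float us[4] = {q.u0, q.u1, q.u1, q.u0};
    const float vs[4] = {q.v0, q.v0, q.v1, q.v1};

    std::array<Point3, 4> corners{};
    for (int i = 0; i < 4; ++i) {
        // Ray through the pixel corner, scaled so that z = 1.
        const float rx = (us[i] - cx) / fx;
        const float ry = (vs[i] - cy) / fy;
        const float rz = 1.0f;

        // Solve n . (s * ray) = d for the distance s along the ray.
        const float denom = q.nx * rx + q.ny * ry + q.nz * rz;
        assert(std::fabs(denom) > 1e-6f);  // ray must not be parallel to the plane
        const float s = q.d / denom;

        corners[i] = {rx * s, ry * s, rz * s};
    }
    return corners;
}
```

The four corners can then be drawn as two triangles, with texture coordinates taken from the same pixel rectangle in the video frame.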

First, open QuestClientApps/Apps/QuadsClient/include/QuadsClient.h and change:

std::string serverIP = "192.168.22.227";

to your server's IP address. Then, in the QUASAR repository, build and run:

# in build directory

cd apps/quadwarp/streamer

./quads_streamer --size 1920x1080 --scene ../assets/scenes/robot_lab.json --pose-url 0.0.0.0:54321 --video-url <headset's IP>:12345 --proxies-url <server's IP>:65432

The QuadStream Viewer app will load a saved static frame from QuadStream to view on the headset. You can change which scene is loaded by editing:

std::string sceneName = "robot_lab"; // choose from robot_lab, sun_temple, viking_village, or san_miguel

in QuestClientApps/Apps/QuadStreamViewer/include/QuadStreamViewer.h.

The QUASAR Viewer app will load a saved static frame from QUASAR to view on the headset. You can change which scene is loaded by editing:

std::string sceneName = "robot_lab"; // choose from robot_lab, robot_lab_transparent, sun_temple, viking_village, or san_miguel

in QuestClientApps/Apps/QUASARViewer/include/QUASARViewer.h.

The QUASAR Client app allows the headset to act as a receiver for a video and quad proxy metadata stream from the server sent across a network. The headset will reconstruct a layered mesh with the quads from the metadata stream and texture map it with the video for reprojection.

First, open QuestClientApps/Apps/QUASARClient/include/QUASARClient.h and change:

std::string serverIP = "192.168.22.227";

to your server's IP address. Then, in the QUASAR repository, build and run:

# in build directory

cd apps/quasar/streamer

./qr_streamer --size 1920x1080 --scene ../assets/scenes/robot_lab.json --pose-url 0.0.0.0:54321 --video-url <headset's IP>:12345 --proxies-url <server's IP>:65432 --remote-fov-wide 140 --view-sphere-diameter 1.0

To wirelessly connect to your headset, type this into your terminal with your headset plugged in:

adb tcpip 5555

adb connect <headset's ip address>:5555

Now, you don't need to plug in your headset to upload code!

To debug or view print statements, open the Logcat tab in Android Studio while the headset is connected.

A majority of the OpenXR code is based on the OpenXR Android OpenGL ES tutorial.

If you find this project helpful for any research-related purposes, please consider citing our paper:

@article{lu2025quasar,

title={QUASAR: Quad-based Adaptive Streaming And Rendering},

author={Lu, Edward and Rowe, Anthony},

journal={ACM Transactions on Graphics (TOG)},

volume={44},

number={4},

year={2025},

publisher={ACM New York, NY, USA},

url={https://doi.org/10.1145/3731213},

doi={10.1145/3731213},

}

We gratefully acknowledge the authors of QuadStream and PVHV for their foundational ideas, which served as valuable inspiration for our work.

This work was supported in part by the NSF under Grant No. CNS1956095, the NSF Graduate Research Fellowship under Grant No. DGE2140739, and Bosch Research.

Special thanks to Ziyue Li and Ruiyang Dai for helping with the implementation!

This webpage is adapted from nvdiffrast. We sincerely appreciate the authors for open-sourcing their code.