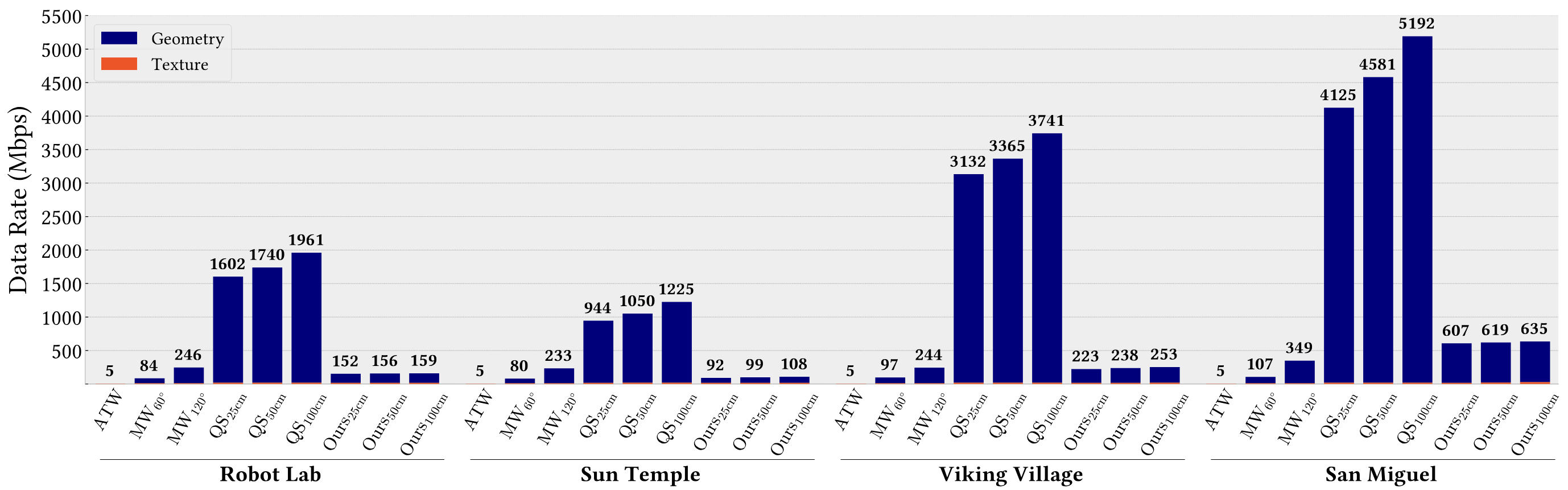

Average data rates of all methods for each trace in our evaluations. Results for QuadStream and our technique are shown for three different viewcell sizes to highlight the effect of viewcell size on data rate. Each reported value is the data rate averaged over both tested latencies.

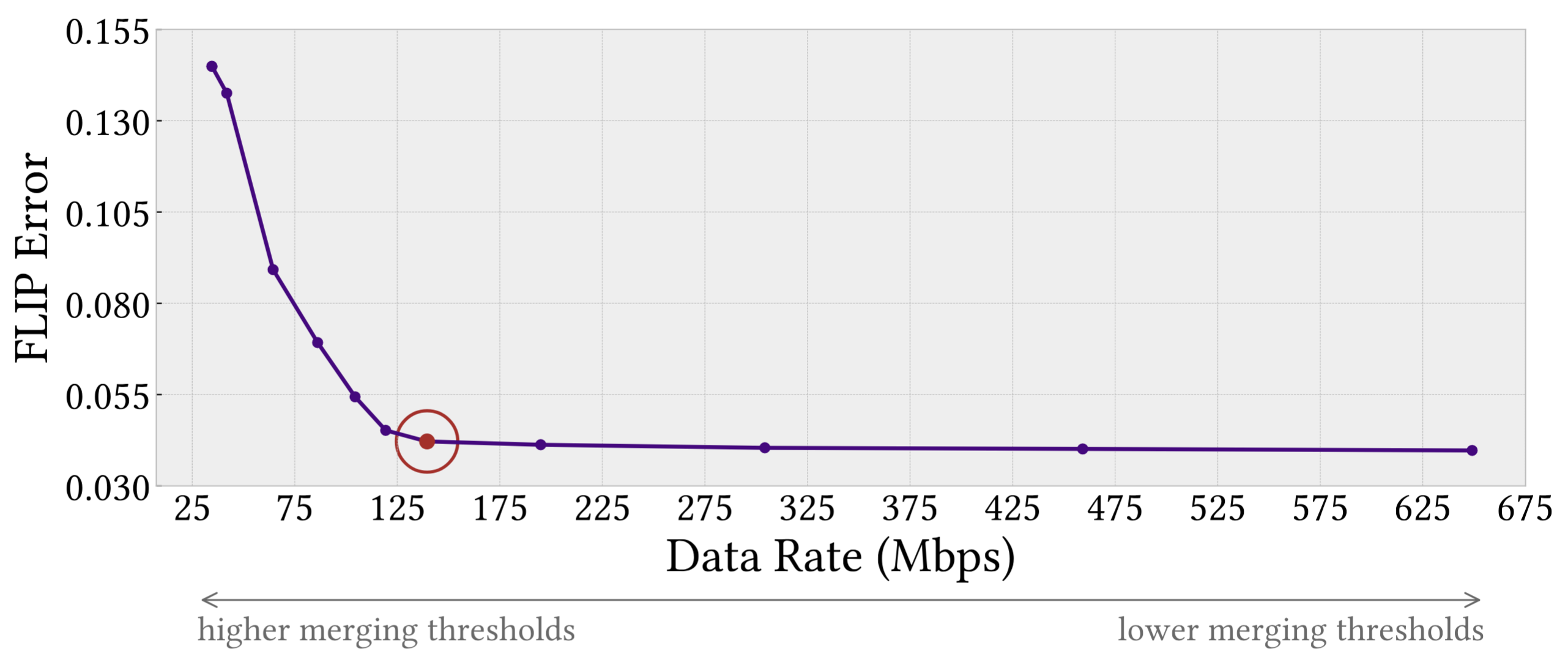

Effect of quad merging on visual quality and data rate for the Robot Lab scene (with a 50 cm viewcell). Each trial uses different quad merging thresholds, which affect geometric resolution and compression efficiency. Parameter values between adjacent points differ by a factor of 2. The chosen operating point (𝛿_sim=0.5 and 𝛿_flatten=0.2) is highlighted.

@article{lu2025quasar,
  title={QUASAR: Quad-based Adaptive Streaming And Rendering},
  author={Lu, Edward and Rowe, Anthony},
  journal={ACM Transactions on Graphics (TOG)},
  volume={44},
  number={4},
  year={2025},
  publisher={ACM New York, NY, USA},
  url={https://doi.org/10.1145/3731213},
  doi={10.1145/3731213},
}