As AR/VR systems evolve to demand increasingly powerful GPUs, physically separating compute from display hardware emerges as a natural approach to enable a lightweight, comfortable form factor. Unfortunately, splitting the system into a client-server architecture leads to challenges in transporting graphical data. Simply streaming rendered images over a network suffers in terms of latency and reliability, especially given variable bandwidth. Although image-based reprojection techniques can help, they often do not support full motion parallax or disocclusion events. Instead, scene geometry can be streamed to the client, allowing local rendering of novel views. Traditionally, this has required a prohibitively large amount of interconnect bandwidth, excluding the use of practical networks.

This paper presents a new quad-based geometry streaming approach designed for compression and for adjusting Quality-of-Experience (QoE) in response to target network bandwidths. Our approach advances previous work by introducing a more compact data structure and a temporal compression technique that reduces data transfer overhead by up to 15×, reducing bandwidth usage to as low as 100 Mbps. Our design is optimized for hardware video codec compatibility and supports an adaptive data streaming strategy that prioritizes transmitting only the most relevant geometry updates. Our approach achieves image quality comparable to, and in many cases exceeding, that of state-of-the-art techniques while requiring only a fraction of the bandwidth, enabling real-time geometry streaming on commodity headsets over WiFi.

Our quad-based representation achieves up to 75 FPS on mobile GPUs and 6000 FPS on high-end GPUs at 1920×1080 resolution.

The videos above show a frozen frame rendered on a Meta Quest 3 VR headset.

As the user moves within the viewcell, disocclusion events are captured, allowing scene viewing from different angles with motion parallax.

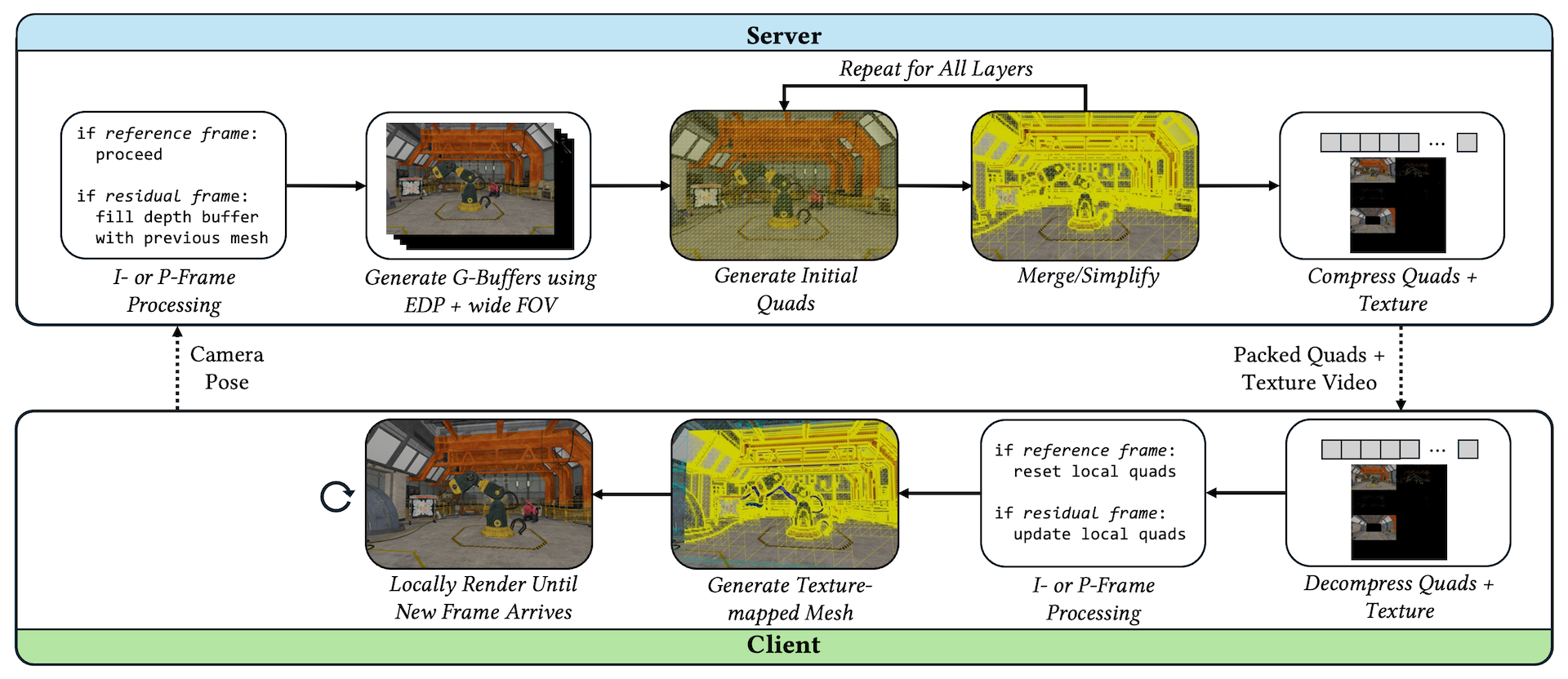

Our remote rendering system uses a quad-based representation of the scene, which is optimized for streaming and rendering. It consists of two main components: a server that approximates a series of layered G-Buffers using pixel-aligned quads, and a client that reconstructs potentially visible scene geometry and renders novel views. Network latency is masked through frame extrapolation, allowing the client to render frames at a high framerate while the server transmits scene updates.

We support client movement within a virtual sphere-shaped viewcell, meaning that the client can move freely within a fixed radius of the pose of a server frame while disocclusion events are still captured. This lets us limit the amount of geometry that needs to be transmitted, as the server only needs to send geometry that is potentially visible from within the viewing sphere. The viewing sphere radius is an adjustable parameter that can be tuned based on available network bandwidth.
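As a minimal sketch of the viewcell constraint, the check below tests whether a client position is still inside the viewing sphere centered on the server frame's pose. The function name `inside_viewcell`, the positions, and the radii are all hypothetical and chosen only for illustration; this is not taken from the released code.

```python
import math

def inside_viewcell(client_pos, server_pos, radius):
    """Return True if the client position lies within the spherical viewcell.

    Assumption: the viewcell is a sphere of `radius` centered on the
    position of the most recent server frame.
    """
    return math.dist(client_pos, server_pos) <= radius

# Hypothetical positions in meters: the client has moved 0.3 m sideways.
server_pos = (0.0, 1.6, 0.0)
client_pos = (0.3, 1.6, 0.0)

print(inside_viewcell(client_pos, server_pos, radius=0.5))   # True
print(inside_viewcell(client_pos, server_pos, radius=0.25))  # False
```

A smaller radius means fewer disocclusions to cover and therefore less geometry to send, at the cost of a smaller region in which the client can move before it needs a fresh server frame.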

To capture potentially visible geometry, we use a technique from prior work called Effective Depth Peeling (EDP) to create layers of G-Buffers. EDP allows only potentially visible fragments to pass, saving bandwidth by avoiding the transmission of fully occluded geometry.

Our quad generation algorithm is inspired by QuadStream. For each G-Buffer layer, we fit a set of merged quads to the visible fragments. If adjacent quads lie along the same plane, they are progressively merged into a single quad. The amount of quad merging is also an adjustable parameter that can be tuned to the available bandwidth.
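The merging step can be illustrated with a small sketch. It assumes a simplified quad representation (pixel-aligned bounds plus a fitted plane) and a single tolerance `tol` standing in for the adjustable merging parameter; the `Quad` type, `coplanar`, and `try_merge` are hypothetical names, not the data structure or API used by the authors.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Quad:
    # Pixel-aligned bounds: covers [x0, x1) x [y0, y1) in one G-Buffer layer.
    x0: int
    y0: int
    x1: int
    y1: int
    normal: Tuple[float, float, float]  # unit normal of the fitted plane
    d: float                            # plane offset, so that normal . p = d

def coplanar(a: Quad, b: Quad, tol: float) -> bool:
    """Planes agree if normals are nearly parallel and offsets nearly equal."""
    dot = sum(p * q for p, q in zip(a.normal, b.normal))
    return dot >= 1.0 - tol and abs(a.d - b.d) <= tol

def try_merge(a: Quad, b: Quad, tol: float = 1e-3) -> Optional[Quad]:
    """Merge b into a if they are coplanar and share a full edge, else None."""
    if not coplanar(a, b, tol):
        return None
    if (a.y0, a.y1) == (b.y0, b.y1) and a.x1 == b.x0:  # b is right of a
        return Quad(a.x0, a.y0, b.x1, a.y1, a.normal, a.d)
    if (a.x0, a.x1) == (b.x0, b.x1) and a.y1 == b.y0:  # b is below a
        return Quad(a.x0, a.y0, a.x1, b.y1, a.normal, a.d)
    return None

left = Quad(0, 0, 1, 1, (0.0, 0.0, 1.0), 5.0)
right = Quad(1, 0, 2, 1, (0.0, 0.0, 1.0), 5.0)
tilted = Quad(2, 0, 3, 1, (0.0, 1.0, 0.0), 5.0)

print(try_merge(left, right))    # one quad spanning x in [0, 2)
print(try_merge(right, tilted))  # None: the planes differ
```

In this sketch, loosening `tol` merges more aggressively, which yields fewer, larger quads and a coarser approximation of the surface.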

Our main contributions include improvements to QuadStream's potential visibility and quad generation algorithms that allow us to fit quads that are more compact and easier to render, a temporal compression technique that dramatically reduces the amount of data that needs to be transmitted, and a discussion of how our technique can be extended to network rate adaptation for Quality-of-Experience (QoE) optimization. Our approach can capture motion parallax, disocclusion events, and transparency effects.

We open-source all our code to provide system primitives and baselines to accelerate remote rendering research (see Code link at the top of the page). Our implementation supports desktops (as servers/clients), laptops (as clients), and mobile headsets (as clients).

Instead of sending entirely new sets of quads for each frame, our method selectively sends only the quads affected by scene changes and disocclusions.

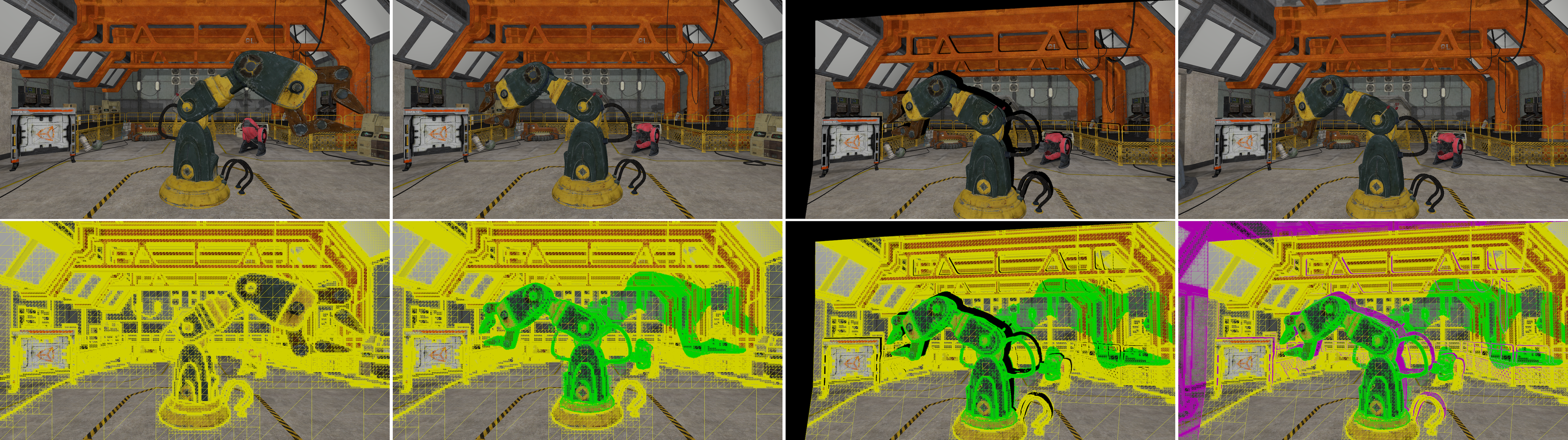

Left to right: We fit quads to a scene using a series of G-Buffers (yellow) and send them to a client to reconstruct and render; this is called the reference frame. To reduce bandwidth usage, we transmit only the quads that capture scene geometry changes (due to animations, etc.) from the reference viewpoint (green) and newly revealed regions due to disocclusions caused by camera movement (black regions). The server sends only these residual quads (green and magenta), called the residual frame, which the client integrates with the cached quads from the reference frame.
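The reference/residual split can be sketched as a dictionary diff. This is an illustration under stated assumptions: quads are keyed by a hypothetical identifier (here a tile coordinate), quad payloads are stand-in strings, and removal of stale quads is not modeled. The names `make_residual` and `apply_residual` are invented for this example.

```python
def make_residual(reference, current):
    """Server side: keep only quads that are new or differ from the reference."""
    return {key: quad for key, quad in current.items()
            if reference.get(key) != quad}

def apply_residual(cache, residual):
    """Client side: overlay residual quads on the cached reference quads."""
    merged = dict(cache)
    merged.update(residual)
    return merged

# Hypothetical quad sets keyed by tile coordinate.
reference = {(0, 0): "wall", (1, 0): "door_closed"}
current = {
    (0, 0): "wall",            # unchanged, so not retransmitted
    (1, 0): "door_open",       # changed by an animation
    (2, 0): "floor_revealed",  # newly disoccluded by camera movement
}

residual = make_residual(reference, current)
print(residual)
# {(1, 0): 'door_open', (2, 0): 'floor_revealed'}
print(apply_residual(reference, residual) == current)  # True
```

Only two of the three quads cross the network in this toy case; the saving grows with the share of the scene that stays static between frames.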

Reference frames are periodically sent to the client at a fixed interval; every reference frame is followed by a series of residual frames.
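A fixed-interval schedule of this kind can be written in a few lines. The interval of 4 below is an arbitrary illustrative value, not a setting reported by the authors, and `frame_kind` is a hypothetical helper.

```python
def frame_kind(frame_index, reference_interval):
    """Classify a server frame as a full reference or an incremental residual."""
    return "reference" if frame_index % reference_interval == 0 else "residual"

# Hypothetical interval: one reference frame followed by three residual frames.
schedule = [frame_kind(i, reference_interval=4) for i in range(8)]
print(schedule)
# ['reference', 'residual', 'residual', 'residual',
#  'reference', 'residual', 'residual', 'residual']
```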

Please refer to the Results Page for visualizations and comparisons of our method against state-of-the-art techniques.

@article{lu2025quasar,
  title={QUASAR: Quad-based Adaptive Streaming And Rendering},
  author={Lu, Edward and Rowe, Anthony},
  journal={ACM Transactions on Graphics (TOG)},
  volume={44},
  number={4},
  year={2025},
  publisher={ACM New York, NY, USA},
  url={https://doi.org/10.1145/3731213},
  doi={10.1145/3731213},
}